Information Transmission

Long about 1996, I read The Text of the New Testament (subtitled Its Transmission, Corruption and Restoration) by Bruce M. Metzger, 3rd edition.

Wow, that edition retails for between $88 and $537 today, 2024-02-22. I should have saved my copy for resale instead of giving it away.

It’s an academic work used as a textbook in Christian seminaries. That sounds sleep-inducing, but it’s surprisingly lively. I learned a little bit about classical Greek, how documents were copied one from another, what kinds of mistakes regular people made when copying, and what kinds of mistakes professional scribes made when copying.

There are still people who hand copy documents. There are people called sofers, who create Torah scrolls. They know exactly which letter ends a line, and which word ends a block of text. This was not done for the New Testament. If the New Testament is the inerrant word of God, why didn’t God give out some similar mechanism? Some folks seem to believe in a Bible code, which is about two steps from a method to double-check that it was copied correctly. Why didn’t the New Testament have checksums?

The Classical world had the technology. The Greeks had a system called isopsephy dating from 300 BCE, so the idea of a letter-to-number correspondence wouldn’t hinder use of a checksum. Some checksums (the credit card Luhn checksum, or ISBN check digits) are simple algorithmically, requiring nothing more than addition, multiplication and a modulo operation (modulo 10 for Luhn and ISBN-13, modulo 11 for the older ISBN-10), which is just the remainder in a division operation.
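To show how little arithmetic a checksum like this needs, here is a minimal Python sketch of the Luhn check. The function names (`luhn_sum`, `luhn_check_digit`, `luhn_is_valid`) are my own, and the sample number is a common textbook example, not a real card number.

```python
def luhn_sum(digits: str, double_first: bool) -> int:
    """Add up the digits right to left, doubling every second one.

    double_first=True doubles the rightmost digit (used when the check
    digit is not yet attached); False leaves the rightmost digit alone
    (used when validating a number that already ends in its check digit).
    """
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if (position % 2 == 0) == double_first:
            value *= 2
            if value > 9:
                value -= 9  # same as adding the two digits of the product
        total += value
    return total


def luhn_check_digit(payload: str) -> int:
    """Return the digit that makes payload + digit pass the Luhn check."""
    return (10 - luhn_sum(payload, double_first=True) % 10) % 10


def luhn_is_valid(number: str) -> bool:
    """A number is valid when its Luhn sum is a multiple of 10."""
    return luhn_sum(number, double_first=False) % 10 == 0


print(luhn_check_digit("7992739871"))   # check digit for the payload
print(luhn_is_valid("79927398713"))     # intact copy
print(luhn_is_valid("79927398714"))     # copy with one wrong digit
```

A check like this catches any single wrong digit, but not every kind of copying mistake: swapping an adjacent 0 and 9, for example, gets through.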

Some form of checksum would certainly have prevented much of the corruption of the New Testament that Metzger wrote about, right up until the development of the printing press, after which the New Testament would be transmitted perfectly.

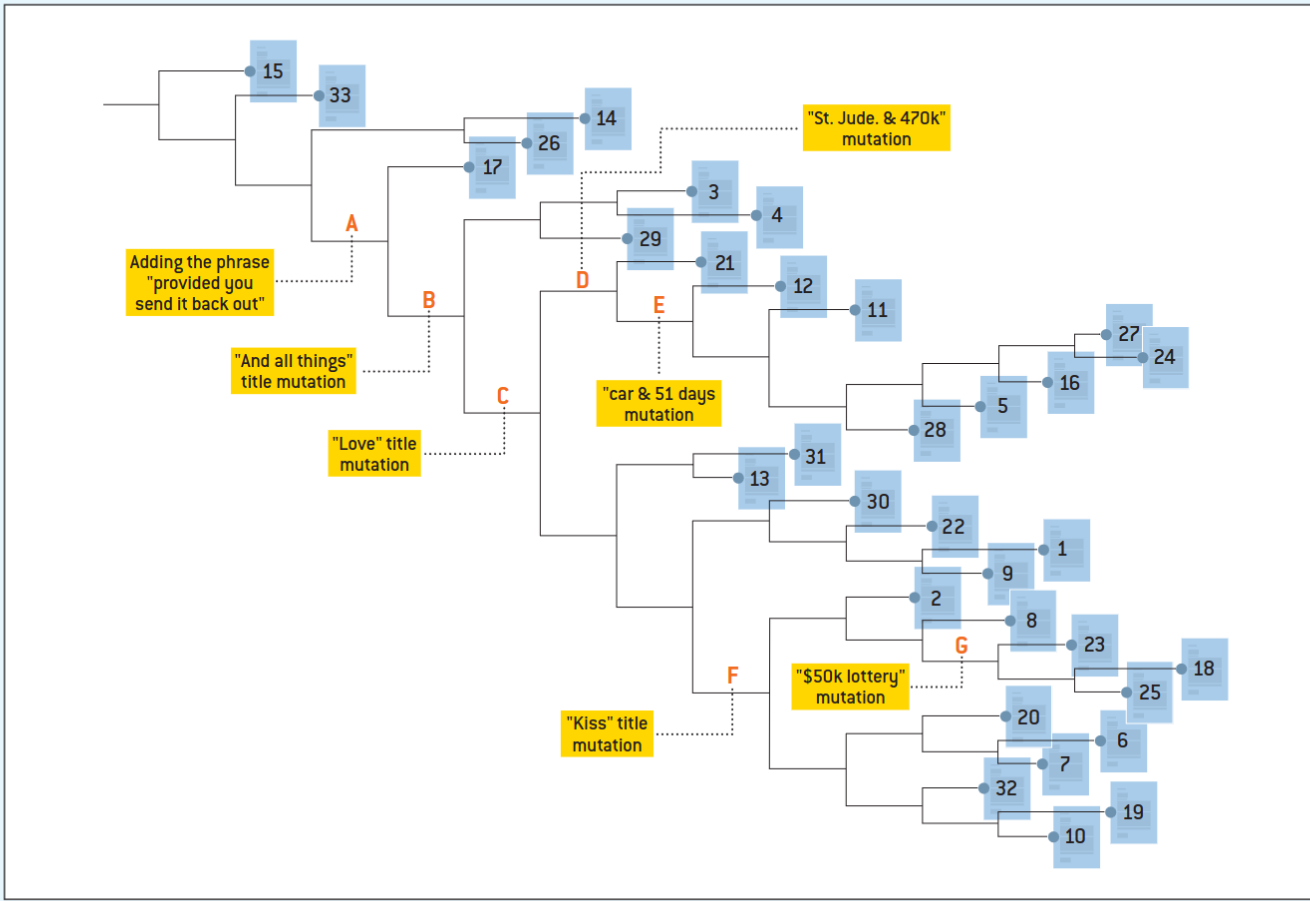

But that’s not true - chain letters underwent evolution well after the printing press was invented, during the age of widespread xerox machine availability. Even with perfect photocopy reproduction, texts changed and evolved.

Ah, but during the late 20th century, we developed digital storage and transmission methods, which do have checksums. We no longer have to concern ourselves with maintaining the perfection of documents. It’s guaranteed.

Even that’s not true.

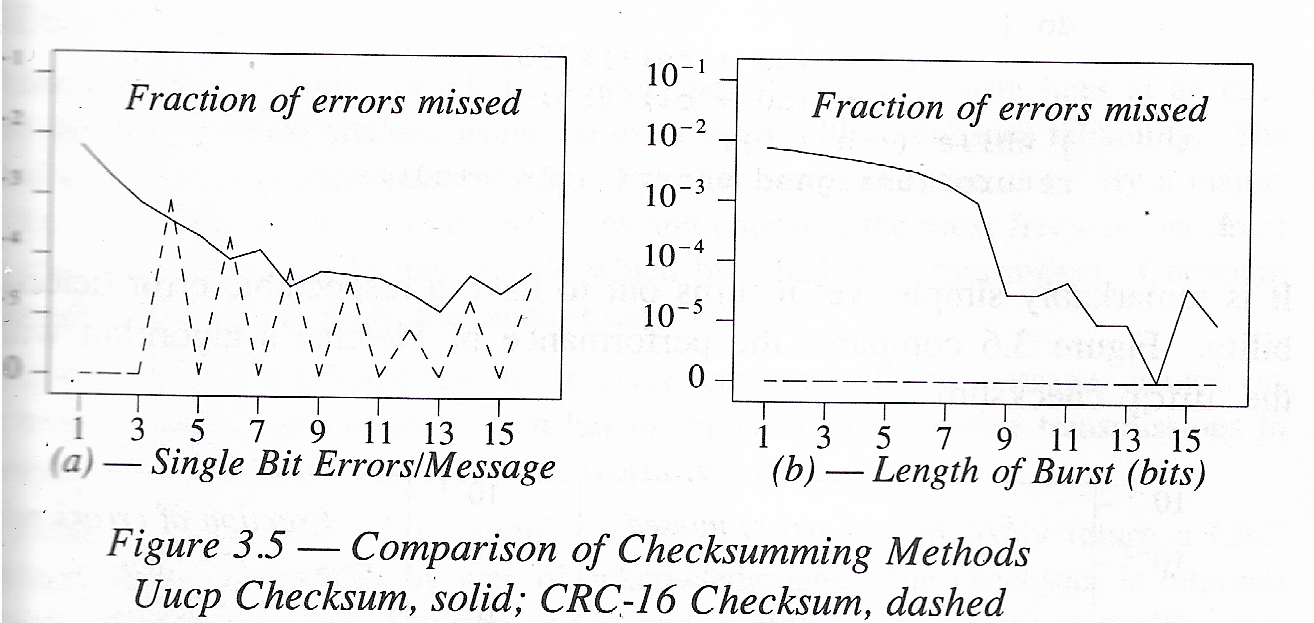

This diagram is from another great book, Design and Validation of Computer Protocols, by Gerard J. Holzmann. It shows that some combinations of bit errors (each one the kind of flip that can change an ‘A’ to a ‘Q’) can slip past a CRC-16 (16-bit Cyclic Redundancy Check) checksum. A lone single-bit error is always caught, but the dashed line on the left-hand graph shows that 0.1% of 4 non-consecutive bit errors can slip past a CRC-16 double check. Of course, the larger the text over which you calculate a CRC, the more errors per text will slip through.
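Here is a rough Python sketch of one such escape. It assumes the CRC-CCITT polynomial (x^16 + x^12 + x^5 + 1) that Python's `binascii.crc_hqx` implements, which may not be the exact polynomial behind Holzmann's graph. The trick is that a CRC is the remainder of a division by the generator polynomial, so an error pattern that is itself a multiple of the generator leaves the remainder unchanged. This generator has four terms, so the pattern flips exactly 4 non-consecutive bits. The sample text and the `corrupt` helper are my own.

```python
import binascii

# The generator x^16 + x^12 + x^5 + 1 written out as 17 bits (0x11021)
# and spread over three bytes. It contains exactly four 1 bits.
ERROR_PATTERN = bytes([0x01, 0x10, 0x21])


def corrupt(message: bytes, offset: int) -> bytes:
    """XOR the generator-shaped error pattern into the message at offset."""
    damaged = bytearray(message)
    for i, mask in enumerate(ERROR_PATTERN):
        damaged[offset + i] ^= mask
    return bytes(damaged)


original = b"In the beginning was the Word"
damaged = corrupt(original, 7)

# Count how many bits differ between the two copies.
flipped_bits = sum(bin(a ^ b).count("1") for a, b in zip(original, damaged))

# For comparison, a copy with a single flipped bit in the first byte.
one_flip = bytes([original[0] ^ 0x10]) + original[1:]

print(original, hex(binascii.crc_hqx(original, 0)))
print(damaged, hex(binascii.crc_hqx(damaged, 0)))
print("bits flipped:", flipped_bits)
print(one_flip, hex(binascii.crc_hqx(one_flip, 0)))
```

The first two lines print different text with the same CRC, while the single flipped bit in the last copy changes the CRC and would be caught.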

Then there are the more esoteric forward error correction codes. Old-school audio CDs used Reed-Solomon error correction. Those of us older than a certain age know that Reed-Solomon isn’t even close to perfect.

It seems that printing presses and digital media just slow the decay of documents. Should humans outlive this day, and come safe home to a distant future, we will still have to work at reconstructing the New Testament, and indeed, any knowledge.

This is a major theme of Walter Miller’s science fiction classic A Canticle for Leibowitz, but that’s partially set in a future dark age, and partially in a future renaissance. Few sci-fi authors other than Miller have recognized that texts decay with time, and that the uncertainty increases across space and time.

We humans live in an eternal now. All our efforts make the now a little bit longer. Entropy smears the past into misty piles of rubble, and randomness does the same to the future.